Mark P. Dangelo: In an AI Reimagined Financial World, It Begins with the Consumer (Part 2)

Mark Dangelo currently serves as an independent innovation practitioner and advisor to private equity and VC-funded firms. He has led diverse restructurings, M&A, and transformations in more than 20 countries, advising hundreds of firms ranging from Fortune 50 companies to startups. He is also Associate Director for Client Engagement with xLabs, an adjunct professor with Case Western Reserve University, and the author of five books on M&A and transformative innovation.

It seems the world has been completely captivated by the rise and potential of AI systems since the start of 2023. Startup funding has skyrocketed to more than $40 billion in the last 12 months, with a critical focus on industry-specific solutions that “ring-fence” proprietary, competitive, and consumer digital assets. However, as AI innovation represents the next phase of computing (i.e., reusable, data-ideated solutions), what should be anticipated, designed, and adopted to promote consumer trust and satisfaction in banking, financial services, and insurance (BFSI), while recognizing that AI is not 100% correct?

As noted in Part 1 of this series, AI is undergoing a “halo and horns” rite of passage. It has propelled firms such as Nvidia to a trillion-dollar valuation on the strength of its AI chips; it is remaking delivery processes across industries; it is giving rise to greater financial inclusion (when utilizing ethically sourced data and training sets); and it is helping ensure regulatory compliance and anticipate “fit,” thereby reducing costs and creating consumer trust. This is the rationale for AI: the value, the “good,” and the reasons for greater expansion and broad adoption. Rationale is encased in our business cases, operating models, KPIs (key performance indicators), and ROI (return on investment).

Yet the fear, uncertainty, and doubt (FUD) surrounding AI is being raised by its own creators (e.g., Sam Altman, Geoffrey Hinton, Kevin Scott, and others), with headlines warning about “… mitigating the risk of extinction from AI …” and comparing its active management to nuclear technologies and pandemic vigilance. Will these overt warnings stop corporations from adopting AI? Will they stop researchers and academics from creating even more powerful “laboratory” versions that deliver artificial consciousness and a sentient state?

These are just a few implications of AI rationale, something traditionally ignored in our rush to adopt new innovations and appear competitively relevant. The implications frequently go unrecognized and unaddressed (e.g., skills, digital maturity), creating root-cause failures surrounding AI sustainability, adaptation to changing business needs (e.g., transparent, data-driven machine learning), and unintended consequences (e.g., the downstream or cascading impacts of synthetic data).

However, for AI to be sustainable, an inclusive ecosystem that “… constitutes a collection of mutually reinforcing guarantees of individual rights and of limitations …” is necessary across our AI solutions as they grow exponentially more robust and increasingly autonomous.

Is There a Critical Imperative Action or Starting Point for BFSI Organizations Embracing AI?

This is the data ecosystem stage set for AI within our financial and mortgage enterprises. The section above illustrates the complexity of the industry discussions and operating models that will shape every aspect of our delivery environment for the remainder of this decade, and we have not even touched the most opaque and critical set of unknowns. These unknown unknowns are the cascading impacts and decisioning in which one AI system feeds its outputs and decisions to one or more downstream AI systems, propagating new outcomes. But what is THE consumer question and implication for AI?

For enterprises, the question might be how quickly and accurately these point-based AI innovations can be incorporated into operational systems. For regulators and auditors, perhaps the interest lies in understanding the decision-making process and underlying data (which might be synthetic) to ensure auditability and equitability. For politicians, perhaps it centers on the number and type of jobs that AI eliminates, creates, or impacts, and of course, on future-of-work models. All are valid and important.

However, the question seldom asked (except by pockets of consumers and advocacy groups) is: what happens when AI is wrong and its outputs are fed into downstream systems? How can those systems be adjusted, their decisions reworked, and the damage they caused be mitigated, at the same speed with which they generated the cascading error in the first place?
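To make the cascading question concrete, the sketch below models a hypothetical lineage map in which each AI system lists the downstream systems that consume its outputs. Given the system that produced an erroneous output, it finds every downstream system that could have been touched, plus a dependency-respecting order in which their decisions could be reworked. The system names, the map itself, and the assumption that such lineage is recorded at all are illustrative only; this is a minimal graph-traversal sketch, not a description of any specific BFSI platform.

```python
from collections import deque
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical lineage map: each system lists the systems that consume its outputs.
# Names are illustrative assumptions, not real products.
DOWNSTREAM = {
    "income_verification_model": ["credit_decision_model", "pricing_engine"],
    "credit_decision_model": ["pricing_engine", "cross_sell_recommender"],
    "pricing_engine": ["disclosure_generator"],
    "cross_sell_recommender": [],
    "disclosure_generator": [],
}


def affected_systems(source, graph):
    """Breadth-first search: every system reachable downstream of `source`,
    i.e., everything an erroneous output from `source` could have touched."""
    seen = {source}
    order = []
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for child in graph.get(node, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


def rework_order(source, graph):
    """Order the affected systems so each is corrected only after every
    affected upstream system that feeds it has been corrected."""
    scope = set(affected_systems(source, graph)) | {source}
    # graphlib expects a mapping of node -> set of predecessors.
    preds = {node: set() for node in scope}
    for parent, children in graph.items():
        if parent in scope:
            for child in children:
                if child in scope:
                    preds[child].add(parent)
    return [n for n in TopologicalSorter(preds).static_order() if n != source]


if __name__ == "__main__":
    print(affected_systems("income_verification_model", DOWNSTREAM))
    print(rework_order("income_verification_model", DOWNSTREAM))
```

Reworking in topological order means no system is corrected before the upstream systems it depends on, which is one way to avoid re-propagating the original error while the fix is rolled out.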

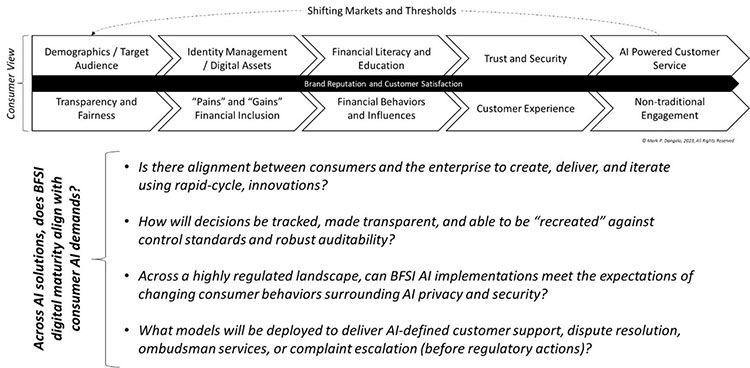

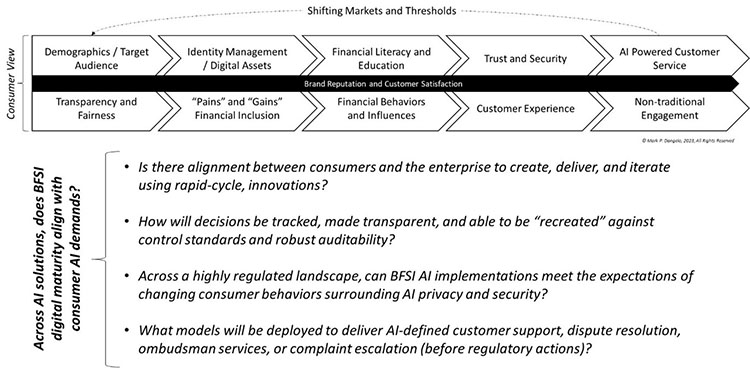

The following figure illustrates the likely segments and questions that will boundary AI for BFSI and mortgage enterprises seeking sustainable solutions.

In Part 2 of this series, we explore what is seldom discussed, researched, or “gamed” as part of the financial data supply chain in the quest to use AI for good (e.g., margins, competitiveness, inclusion). Indeed, AI represents a new computing era (there have been five previous ones), but while we concentrate on the rationale behind it, those troublesome implications are why, when AI is deployed in a reimagined digital financial ecosystem, it begins with the consumer.

What is an AI Bill of Rights (AI-BOR) for Consumers Across Systems, Enterprise, and Industry?

We should recognize that AI represents a quantum shift not just in capability, but in speed, complexity, skills, compliance, and, most importantly, consumer engagement. Returning to halos and horns, the ability to engage with the more than 75 million unbanked and underbanked in the U.S. represents a halo potential for economic growth and middle-class prosperity. For housing, lending, credit, and even financial cross-selling, AI has the potential to add trillions to the GDP of a country debating federal, municipal, student, and personal debt.

However, the horns of delivering consumer value within and across AI decisioning, while understanding which of the millions of potential data elements are applicable, remain the root cause of inaccurate outcomes. Remediating those outcomes is frequently beyond the organizational capacity and skill levels available for a fast resolution and lasting conclusion (e.g., ensuring that the algorithms and learned outcomes can be adapted).

The rationale for adopting an AI-BOR will vary by sub-industry (e.g., retail, mortgage, equity, crypto), and so will the granular implications of implementations. However, there are consistent framework components that must be addressed with every AI system and intelligent RPA (robotic process automation) solution. These include:

• Transparency: When is AI used, and how does it impact or create customer experiences and outcomes?

• Privacy: How are individual digital assets being used, stored, shared, and protected? What auditable practices and processes are used to delete information—correct or in error?

• Fairness: Who determines (e.g., AI, human, or hybrid) whether algorithms or training data are biased, discriminatory, or exclusionary (e.g., by race, gender, ethnicity)?

• Auditability: Why and how are decisions made using AI—reasons, justifications, impacts, outputs, touchpoints, and recreation of outcomes?

• Accountability: What mechanisms are in place to address the above, while creating rapid channels for complaints and error remedies?

• Security: How does the enterprise, including its systems, outsourcers, partners, and even supply chain firms, anticipate and guard against unauthorized data access, decision outcomes, and manipulation of results?

• Redress: Who is accountable for AI recourse? How will incidents be addressed and corrective actions taken? Where does the consumer “approve” the redress solution, including the reissuance of AI data and all its downstream touchpoints and consequences?

The proactive design of an AI-BOR should not be viewed as a nice-to-have checkbox. International, U.S. federal, and state governments are all actively working on their own unique (i.e., non-integrated) solutions, likely resulting in an onerous patchwork quilt of regulatory oversight and compliance rationale. For BFSI and mortgage leaders, actively integrating an AI-BOR across traditional transactional systems and mindsets aided by AI seems costly and uncertain.

However, in May 2023 a dozen state attorneys general put JPMorgan on notice over its banking practices, speculated to be indirectly the result of intelligent RPA/AI enforcing regulations and practices (see “JPMorgan Targeted by Republican States Over Accusations of Religious Bias,” The Wall Street Journal, May 13, 2023). Will discovery prove the system decisions to be accurate, biased, or the result of cascading unintended decision making? More importantly, if automated decisions were used, can the data used at the time be recreated, or will these “intelligent” data-driven solutions be unable to recreate outputs due to algorithmic or digital content changes? What hidden liabilities are rooted in today’s AI implementations?
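Whether an automated decision can be recreated depends largely on what was captured when it was made. The sketch below assumes a hypothetical `DecisionRecord` that stores the model name and version, a copy of the inputs, and a SHA-256 fingerprint of those inputs. A replay is treated as faithful only when the same model version sees the same canonicalized inputs. The field names and the replay rule are illustrative assumptions, not a regulatory requirement or the author's method; a real system would also need to retain the model artifact itself.

```python
import hashlib
import json
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """Hypothetical audit record written at the moment an AI decision is made."""
    decision_id: str
    model_name: str
    model_version: str
    input_snapshot: dict
    input_hash: str
    output: dict
    decided_at: str  # ISO-8601 timestamp in UTC


def fingerprint(payload: dict) -> str:
    """SHA-256 of a canonical JSON encoding (sorted keys, no extra whitespace)."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def record_decision(decision_id: str, model_name: str, model_version: str,
                    inputs: dict, output: dict) -> DecisionRecord:
    """Capture inputs, model version, and output so the decision can be revisited."""
    return DecisionRecord(
        decision_id=decision_id,
        model_name=model_name,
        model_version=model_version,
        input_snapshot=dict(inputs),  # shallow copy of the inputs as seen
        input_hash=fingerprint(inputs),
        output=dict(output),
        decided_at=datetime.now(timezone.utc).isoformat(),
    )


def snapshot_intact(record: DecisionRecord) -> bool:
    """Detect whether the stored inputs were altered after the decision."""
    return fingerprint(record.input_snapshot) == record.input_hash


def can_replay(record: DecisionRecord, current_inputs: dict,
               current_model_version: str) -> bool:
    """A replay reproduces the original decision only if neither the model
    version nor the (canonicalized) inputs have changed."""
    return (record.model_version == current_model_version
            and fingerprint(current_inputs) == record.input_hash)
```

Because the fingerprint is computed over sorted keys, the same inputs supplied in a different field order still match, while a changed value or a new model version does not, which is exactly the situation the questions above describe.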

Government Regulation Under Construction—Ready to Embrace Consumer AI Risk Uncertainty?

Reinforcing these overarching customer principles, the White House Office of Science and Technology Policy (OSTP) released five building blocks in October 2022, representing multi-year development and comment from consumers and businesses. These include (as extracted from the publicly available document, see “Blueprint for an AI Bill of Rights: A Vision for Protecting Our Civil Rights in the Algorithmic Age”):

• Safe and Effective Systems: You should be protected from unsafe or ineffective systems.

• Algorithmic Discrimination Protections: You should not face discrimination by algorithms and systems should be used and designed in an equitable way.

• Data Privacy: You should be protected from abusive data practices via built-in protections and you should have agency over how data about you is used.

• Notice and Explanation: You should know that an automated system is being used and understand how and why it contributes to outcomes that impact you.

• Alternative Options: You should be able to opt out, where appropriate, and have access to a person who can quickly consider and remedy problems you encounter.

The above is just a starting point, and the U.S. Congress and state legislators are only now weighing in to capitalize on public concerns, media attention, and the upcoming 2024 elections.

Once your enterprise has devised an AI-BOR, what should you do with it? Putting this into the framework of the aforementioned method (rationale and implications), AI-BORs represent the rationale. Now let’s address the implications of AI solutions where the consumer is the starting point.

Is There a Preparatory Framework to Repair AI Decisioning When It Generates Errors?

Today, AI systems are still developing but are gaining in acceptance and capabilities. However, buried within layers of complex algorithms underpinned by petabytes of (training and operating) data, AI transparency, while growing, is obscured by innovative advancements, transitory functional building blocks, and orchestration implementation methods that are not core competencies of traditional BFSI information technology (IT) staff, either in-house or consulting.

However, like the AI-BOR rationale, the implications of AI adoption point to a convergence of methods to mitigate and correct AI errors. These include:

• Data Refinement: Manually or automatically identifying errors and correcting the data.

• Model Retraining: Correcting systemic issues within the model by updating or expanding datasets, adjusting architectures, or altering parameters.

• Active Feedback: Collecting human feedback to determine the root cause, then updating or retraining the model(s).

• Human Intervention: Manual post-production reviews that identify errors, classify the situation, and adjust outcomes, while creating solutions to avoid recurrence.

BFSI leaders with an AI-reimagined consumer approach have also proactively developed processes and outcomes to deal with errors. These include:

• Predefined AI Customer Support: Customer contact personnel need training to know how to identify AI involvement and the procedures for tier escalation.

• Error Disputes: There must be processes and procedures that advocate for consumers who identify AI errors, along with rapid-escalation solutions that limit cascading AI propagation. Recovery must revert to the system of record to ensure that erroneous data cannot be replicated downstream.

• Escalation Processes: When identified or requested, AI solutions (if they are unique to given departments or personnel) must be rapidly escalated and monitored to ensure that solutions are implemented at the customer level first and the system level second.

• Ombudsman Services: At this stage, AI will require a unique customer dialogue and an organizational importance beyond traditional “rule-based” legacy systems, because the damage and impacts to customer bases (and brand) can be rapid and devastating if not immediately addressed.

Taken together, the two categories form the ecosystem of solutions to address and prevent AI errors. An illustration of the advanced methods and disciplines (i.e., the next steps) using a consumer-focused approach for AI is shown below, along with the impacts and targets of each technique. It is worth noting that these segments are not mutually exclusive and may be layered and integrated.

The diagram above shows the landscape of solutions, but as we can quickly see, the underlying complexity will be beyond many traditional IT staff. Moreover, the investment in these advanced capabilities points to a growing reliance on vendor or outside offerings. It will demand a new skill set within the enterprise that manages and layers AI solutions to achieve the operating and business results of the enterprise and the markets it serves. This “new” leadership skill is “orchestration,” and it will become more valuable than the prior decade’s focus on data scientists.

In conclusion, AI systems at their simplest make data-driven decisions via algorithms, which adapt based on the data they have access to and ingest into their ecosystem. Yet when factoring in consumer demands and expectations, AI definitions, impacts, pains, and gains need to be altered to reflect customer realities.

As we have stated, what should consumers do when AI is wrong? Which AI systems are we using, and how do they granularly impact consumer loyalty, profitability, trust, and brand recognition? What digital gateways are defined that feed the systems of record (SORs) connected to the AI systems, and what are the recovery processes across the digital supply chain?

Finally, if we define the AI rationale, is the enterprise prepared for the implications of adoption, not to mention the continuous adaptability necessary to keep the layers of AI systems innovatively relevant and accurate?

AI is a comprehensively different approach from traditional, rule-defined system thinking. The AI mindset rests on data-driven, data-product building blocks that require an innovative approach to address this sixth generation of computing. Is your organization ready for the implications of consumer-driven AI, beyond the hype of its rationale?

(Views expressed in this article do not necessarily reflect policy of the Mortgage Bankers Association, nor do they connote an MBA endorsement of a specific company, product or service. MBA NewsLink welcomes your submissions. Inquiries can be sent to Michael Tucker, editor, at mtucker@mba.org, or Anneliese Mahoney, editorial manager, at amahoney@mba.org).