Mark P. Dangelo: The Agenda Driving Leadership Data, Analytics and Hubs

Mark P. Dangelo is Chief Innovation Officer with Innovation Expertus, Cleveland, Ohio, responsible for leading and managing global experiential teams for business transformations, digital projects and innovation-based advisory services. He is also president of MPD Organizations LLC and an adjunct professor of graduate studies in innovation and entrepreneurship at John Carroll University. He is the author of five innovation books and numerous articles and a regular contributor to MBA NewsLink. He can be reached at mark@mpdangelo.com or at 440/725-9402.

Digital leadership principles now advocate “telling a story” to improve how events inside and outside the enterprise are internalized and acted upon. From measuring marketing programs to conducting M&A integrations, storytelling has become a core competency for influencing outcomes while improving success rates. However, when it comes to rising oceans of data, what story do these disjointed floods of consumer and business activities forecast? Do you find that traditional business intelligence solutions and practices lead to long lead times, large capital investments and difficult adaptation?

With an explosion of tools now available (e.g., #Tableau, #GoodData, #Domino, #Dataiku), the question of what to measure, monitor, and highlight is firmly in the hands of end-user domain experts, who are increasingly offered sophisticated analytical capabilities. No longer are needs and capabilities removed from the users and their iterative use cases. Results are measured in weeks, not months.

The Demands for Data D2I2 Hubs

As we have discussed during the last several months in prior strategy and innovation articles, 2022 promises to be a year of transition: mid-term elections, increased work-from-home (#WFH), and innovative technologies that will alter IT budgets for the remainder of the decade. As the six-Vs of data explode (i.e., volume, velocity, validation, value, variety, variability), so does the complexity of the tools available, along with the correlation insights made possible by advancing data science. Taken holistically, these advances create digital transformation demands and challenges that few financial leaders are prepared to act upon for distinctive insights and lasting value creation.

It is a brave new world driven by everything digital, yet what are the effective leadership principles needed to identify trends, speed adoption, and promote adaptation? How will these expansive marvels of results and predictive insights aid customer growth and retention? Or do traditional business intelligence dashboards create a myopic focus that discourages risk taking and positive disruptive adaptations? In this digital age, the demise of traditional visualizations and metrics is comprehensively disruptive, especially with the explosion of low-code / no-code (#LC / #NC) capabilities that place the power of analysis into the hands of domain experts, not #IT scientists.

Moreover, the popularity of business dashboards, complex analytics, and voluminous data metrics has transformed business intelligence into an innovative opportunity for c-level leaders to ask different questions. From the colors selected to the density of data to the types of charts to the goals served, yesterday’s single-purpose dashboards have evolved into layered data delivery hubs, analogous to smart warehouses serving customer fulfillment purposes.

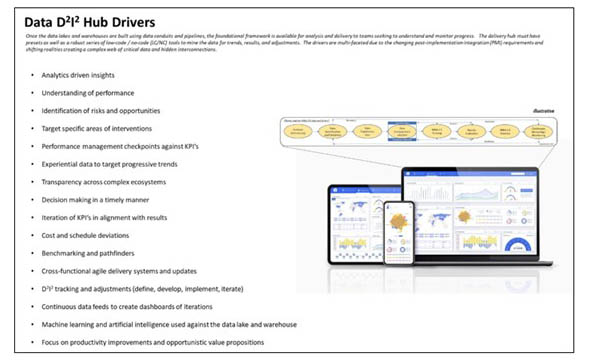

These delivery hubs, herein known as data D2I2 hubs (#D2I2: define, develop, implement, iterate), represent an adaptation of traditional business intelligence offerings that relies on the empowerment of domain employees. As illustrated in the accompanying graphic, data D2I2 hubs are changing the stories told, the segmentations of purpose, and the value analysis concluded against the demands of data governance and curation.

As financial services firms face disruptive innovations wielded by atypical competitors, leveraging data through analytical and measurement hubs will be a top agenda item for c-level leadership for the remainder of the decade. Armed with lakes and warehouses of data, leaders need to embrace continuous change driven by changing stories, purposes and, most importantly, opportunities.

The Story

Depending on the goals for the data D2I2 hubs (tactical, strategic, event driven, or performance), the story surrounding the data, metrics, and KPIs fills in the narrative, making the outcome either fictional or non-fictional. However, the story continually evolves with the outcomes achieved and the leadership capabilities used while framing the digital demands and anticipated actions (i.e., what is non-fictional today could be fictional tomorrow).

Indeed, data is the glue and the fuel for data hubs. Data is the reality that begins with the consumer, encompasses the systems of record, and is expanded by third-party relationships across growing lakes and warehouses of transactions and metrics identifying behaviors and opportunities. When it comes to financial services, the digital demands and complex analysis increase with every loan, innovative offering, and payment opportunity. When it comes to lending, data represents the foundation for ML and AI, allowing predictions to be made with accuracy against a continually changing set of variables and risks.

Yet the more data we assemble within our unstructured data lakes and structured data warehouses, the more unanswered questions we generate. The seemingly moronic question of “why is data valuable” has never been as opaque as it is now due to the expansion of the six-Vs and the stampede to embrace machine learning (#ML), computational intelligence (#CI), and synthetic intellect (#SI or #AI) all built across patterns of anticipated and experiential knowledge.

Storytelling with data, achieving effective layouts, and projecting potential outcomes using advanced technologies take many forms. And while many of the choices about how to represent the story come down to effectiveness versus personal preference, the core determination of what “is” the story begins with the data, its governance, and its curation. Additionally, it is worth noting that telling the story, identifying trends, and making intelligent decisions are not just about real-time data. As with any other decision-making process, if we as financial leaders use only real-time data, we greatly increase the risk of embracing change based on one-off timeboxes that could alter investments, customer offerings and innovative solution sets.

The Purpose

While a foundational purpose of storytelling is to provide a means to “make sense” of these expanding oceans of data, we cannot ignore other critical principles which must be addressed to promote integrity and auditability of our analysis. These global principles and design purposes include:

- Transparency of data made possible by robust and iterative data governance and curation. Multiple sourcing and vetting will help ensure that outlier data cannot improperly influence decision outcomes, as well as providing full chain-of-custody security and privacy.

- Risk analysis with bounded limits on applicability—meaning risks are relevant for a given set of parameters and performance results or criteria. Risks extrapolated with phase-shifted data can be worse than having no data insights—that is, a little knowledge projected as a truism.

- Iteration, or tracking of progression, is required to show not only causality but also how results change over time. By adopting the auditing and journaling practices common with traditional systems-of-record, leaders can achieve confidence and accountability quickly and with verifiable objectivity.

- Continuous delivery behaviors, achieved using adaptable data pipelines, not only to expand capabilities but also to improve the statistical confidence intervals of decisions. Effective leaders should be adopting approaches found in the growing body of practices surrounding enterprise machine learning operations (#MLOps) that promote continuous integration (#CI) and continuous delivery (#CD).

- End-to-end integrity. As the focus of security and privacy shifts from exposure to end-to-end integrity, the expansion of the six-Vs is creating a perfect storm for data usage across analytical solution sets. With growing sophistication, black hat actors can negatively influence decision-making outcomes by injecting causality metrics that, when integrated across data supply chains, lead to downstream consequences few enterprises are prepared to address or mitigate.
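
The transparency and journaling principles above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a prescribed implementation: the function names (`vet_metric`, `journal_entry`, `journal_is_intact`), the source names, the sample values, and the 10 percent tolerance are all hypothetical and are not part of the D2I2 framing. The sketch takes the same metric from several sources, uses the median so that a single outlier cannot dominate the result, flags sources that stray from the consensus, and records the outcome in an append-only, hash-chained journal so that later tampering can be detected.

```python
import hashlib
import json
from statistics import median


def vet_metric(readings, tolerance=0.10):
    """Combine one metric reported by several sources.

    readings: dict mapping a (hypothetical) source name to its reported value.
    tolerance: relative deviation from the median that gets a source flagged.
    """
    consensus = median(readings.values())
    flagged = sorted(
        source
        for source, value in readings.items()
        if abs(value - consensus) > tolerance * abs(consensus)
    )
    return consensus, flagged


def journal_entry(journal, record):
    """Append a record, chaining it to the previous entry's hash."""
    prev_hash = journal[-1]["hash"] if journal else ""
    payload = json.dumps(record, sort_keys=True)
    digest = hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()
    journal.append({"record": record, "prev": prev_hash, "hash": digest})
    return journal


def journal_is_intact(journal):
    """Recompute every hash; any edited record breaks the chain."""
    prev_hash = ""
    for entry in journal:
        payload = json.dumps(entry["record"], sort_keys=True)
        expected = hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()
        if entry["prev"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


# Illustrative values only: three sources report a delinquency rate (percent).
readings = {"system_of_record": 2.1, "servicer_feed": 2.2, "third_party": 9.5}
consensus, flagged = vet_metric(readings)

journal = []
journal_entry(
    journal,
    {"metric": "delinquency_rate", "value": consensus, "flagged": flagged},
)
print(consensus, flagged, journal_is_intact(journal))
```

The median is used here as one simple outlier-resistant choice; a production hub would more likely rely on its governance tooling and a proper audit store than on an in-memory list.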

The creation of data D2I2 hubs may start with understanding the story or the outcomes desired, but in our zeal to pursue opportunities and achieve those outcomes, the complex reality of arriving at structured, effective, and iterative solutions involves more than arming domain experts with tools and LC/NC training. For the 2022-2025 c-level agendas, critical competencies and skills must be applied to data that will be not only a competitive differentiator but comprehensively disruptive.

In the end, IT leaders and their third-party partners will become less “vendor-like” and more like internal consultants, offering a plethora of capabilities all floating on top of vast data oceans, warehouses, and lakes.

Why? To meet the challenges brought forth by business leaders and to capitalize on rapid-cycle and often conflicting opportunities. Nowhere is this more evident than in financial services, where non-traditional competitors are often existing partners who were once part of the consumer supply chains, yet who now control the emerging outcomes with actionable data and insights against the commoditization and disintermediation dominating current offerings pushed by brand names.

The Opportunity

The implementation of D2I2 hubs as part of c-level agendas is moving beyond the snapshot of metrics and KPIs now incorporated within the next generation of business innovations: ML, CI, and AI. With intelligent solutions now leveraging traditional system-of-record offerings and their transactional data stores, the opportunities presented to innovative leaders are on the rise. The capabilities of and investments in intelligence offerings have also coincided with advancements in delivering insights directly to consumers the way they want to experience them (e.g., form factors, frequency, visualizations, impacts).

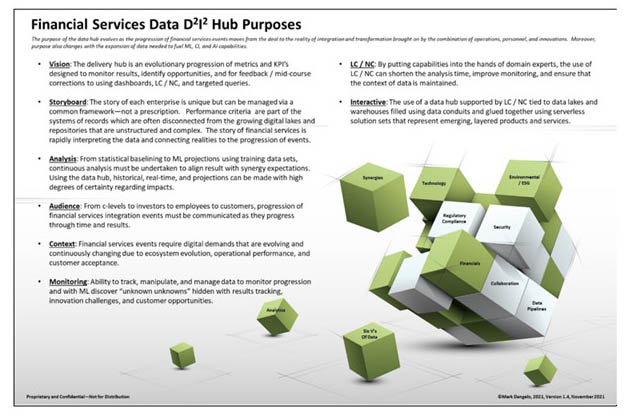

This convergence of purpose, technology, and demand gives critical importance to the use of data hubs that are both fixed and variable in their content. The accompanying graphic shows the demands of these “hub purposes,” highlighting accepted foundations but also atypical implementation and governance requirements.

It is noteworthy that opportunity recognition and pursuit are no longer about following a proven, strong-willed pathfinder personality, as was common across industries prior to these advancements. The effective use of data D2I2 hubs as framed can provide the enablement and empathy in offerings that have eluded smaller banks and financial institutions. It can represent a critical shift in mindset, approach and delivery system that levels the playing field while providing scale and adaptability.

As 2022 arrives, c-level leaders who modify their agendas to incorporate data hubs as framed may experience gains well above their peers. In the end, isn’t that why we budget for initiatives?

(Views expressed in this article do not necessarily reflect policy of the Mortgage Bankers Association, nor do they connote an MBA endorsement of a specific company, product or service. MBA NewsLink welcomes your submissions. Inquiries can be sent to Mike Sorohan, editor, at msorohan@mba.org; or Michael Tucker, editorial manager, at mtucker@mba.org.)