Mark Dangelo: Data Stacks—a 25-Year Journey that Began with MISMO

Traditional industry approaches to efficiency have been focused on Fintech and Regtech offerings—incremental approaches to address an opportunity or problem.

In a dialogue with MISMO President David Coleman, this article examines the technological advancements that have broken the application investment methods and exposed the inefficiencies of building and investing in solutions built on data replication, ETL / ELT, and non-standardized APIs.

With the world focused on the upcoming presidential election, the mortgage industry is undergoing its own “watershed” series of transformations impacting regulatory landscapes, market dynamics, and, of course, technological advancements. Focusing on the last category, industry leaders typically highlight AI (artificial intelligence), data, and skills as enablers of future compliance and market opportunities. However, perhaps the most critical of all the evolving innovations and advancements within the industry revolves around a 40-year challenge: data.

Still today, data is the most common yet misunderstood set of challenges and opportunities facing an industry trying to determine a) where to invest its capital dollars, b) what cloud (i.e., off-premises) solutions to adopt, and c) how all the siloed applications with their own tightly coupled data can be used to deploy event-driven AI capabilities.

Because of traditional application approaches, the terminology used to model modern data complexities and their continuous management tends to make for unfamiliar, esoteric industry discussions. Applications are familiar and easily internalized, especially when considering incremental improvements or operational efficiencies. From the perspective of traditional industry approaches, data products without an application (mindset) are analogous to pouring a home foundation without any blueprints for what to build upon it.

It is this transformative unfamiliarity that often makes data-driven discussions appear like academic theory or intellectual time sinks. Yet across technology disciplines, beyond industry events, and within research enclaves, the focus is fixed on data: stacks of data linked together. Standardization of elements and interoperability (e.g., MISMO) are being used as building blocks for complex reuse, governance, and efficiencies well beyond the borders of their original intent.

Beyond Standards

Within industry bodies and associations, standards were once considered an end-state. Today, standards are viewed as a glue to bind and encapsulate behaviors, contexts, and lifecycles associated with discrete elements, much like a sovereign currency or token of value. Some assert that data in its lowest forms of reuse can be ethically traded and manipulated while remaining linked to the original system-of-record (i.e., non-replicated, non-ETL / ELT).

Historically, applying standardization to siloed data within application boundaries met with significant rework, constant replication, and expensive reconciliation, if it was done at all given the use cases and business models. Currently, with low volumes, limited supply, and unfavorable affordability, housing leaders are faced with limited “free” cash to invest in new, digitally native solutions. It is a catch-22 that can only be broken with a fundamental data-driven shift to harness vast amounts of natural and synthetic data to drive innovation, improve decision making, and stay competitive.

“As with anything in the mortgage industry, change takes time,” continues David Coleman. “I was an early industry adopter in the move to data (vs. information). As an example, when I launched the concept of integration (between LOSs and DU) prior to MISMO’s creation, data was really text. System data was gathered as text strings to be printed, not to be used for downstream systems or processes.”

Moreover, unlike prior applications and their provisioning processes (e.g., Fintech, Regtech, ROI, IRRs), data has become its own product solution for cross-domain sources. Increasingly, data has application-independent value, it can be “traded,” and it can be part of new RevOps (revenue operations) business models, especially for AI applications seeking common, audited data lineage linked to the most up-to-date (i.e., event-driven) information.

Understanding why leaders should invest in unique data-driven solutions begins with the implementation of adaptable (structured and unstructured) data standards. And for those who believe that (data) standards inhibit innovation, industry leaders need to place into architectural context how standards deliver the starting point of a robust, adaptable data architecture stack that transcends technology. This is especially true for emerging AI capabilities, whose lifecycles are measured in weeks and months, not years.

The Lift-and-Shift of Standards Against Technological Innovation

In 1999, MISMO started a journey to create common data definitions, structures, formats, and quality. The purpose was straightforward—interoperability, consistency, and error-reduction. Today, it has adapted to complex data demands against an explosion of innovative applications leading to advanced requirements surrounding security, privacy, metadata, lifecycle governance, and more.

Throughout this evolutionary process of delivering multimodal data across applications and functional silos, the principles of supplying data as a containerized asset within a process workflow shifted. The shift was both subtle and explosive with the inclusion of new data types created by Fintech offerings and non-structured data (i.e., big data, data marts).

To add additional chaos into the data explosion and the shift of corporate priorities—application versus data—SaaS and cloud offerings comprehensively transformed the discussion of how to provision software, where to store data, and how to integrate it across platforms. Finally, these last two years have witnessed yet another comprehensive reprioritization—artificial intelligence.

However, regardless of any desire to return to an application focus with encapsulated data management, the change to data-first ideation is permanent. For many within the industry, there is frustration surrounding the impacts, yet it is also a once-in-a-generation opportunity to move beyond transactional mindsets and designs while leveling the playing field for both large and small bankers.

This ideation shift started a decade prior, but the explosion of AI offerings—both large and small—has sealed the transition from traditional application centric to modern data-centric designs and delivery. Coleman also states, “Today, (Gen) AI supports a new way of thinking, and it requires data—lots of it—to make it successful. The key success factor for AI is that the data is reliable – structured, consistent, standardized – the MISMO data model. (Gen) AI allows the decoupling between process flows from its data thereby creating and delivering reusable building blocks regardless of the application.”

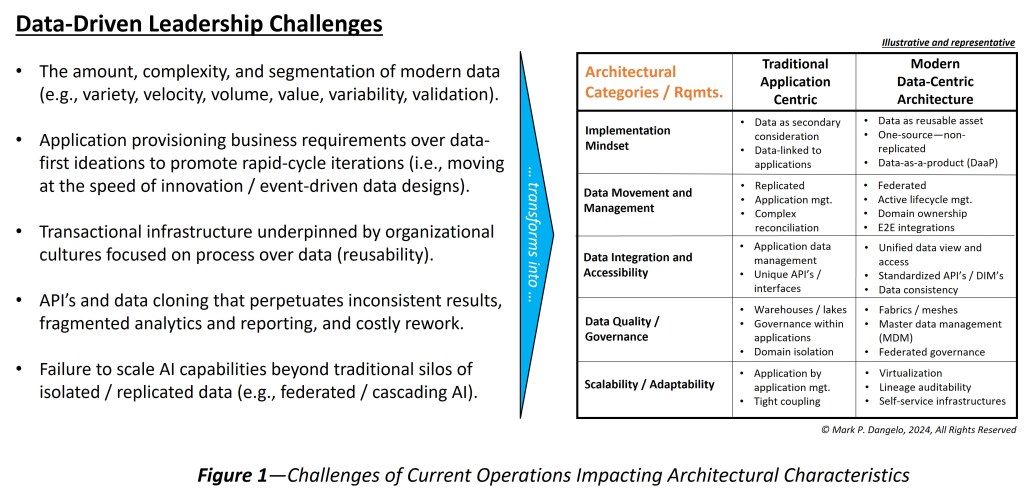

Twenty-five years ago, the original MISMO charter could not have predicted the shift, let alone the technical, organizational, and leadership challenges that are reimagining the mortgage industry as its markets, consumers, products, and services also reel from continuous digital innovations and behaviors. In Figure 1, the illustrative challenges showcase the shifts and their impacts on architectural elements within the modern data-centric approach contrasted against tradition.

Yet what do these shifts mean? Which call-to-action initiatives can be prioritized? More importantly, what skills and iterative solutions can be created to deliver against a roadmap that most efficiently transforms operations from process-driven to data-driven?

The First Step into a Next-Gen Journey

When it comes to the operational and financial impacts of standards, their contribution to transactional value is accepted even when considering multiple version maintenance and mapping. With respect to data as a product (DaaP) as a priority over transactional implementation, the discussions and debates become opaque and circular due to fundamental shifts of culture, operating mindsets, and traditional system implementation strategies.

The foundational distinction of what is needed, and what is coming because of AI’s advancements, is that data represents the rationale behind auditable algorithms. If the data is suspect, corrupt, or error prone, then the output from even the most advanced AI algorithms will be the same. Moreover, the data outputs and the synthetic data used for training and production will feed additional downstream AI systems, thereby propagating anomalies and creating chaos across systems, all faster and with greater impact than traditional applications.
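The propagation risk described above can be illustrated with a small validation gate placed in front of any downstream consumer. What follows is a minimal sketch, not a prescribed implementation: the `LoanRecord` shape, the rule functions, and the rate threshold are all hypothetical and are not taken from the MISMO data model.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


# Hypothetical record shape for illustration only (not MISMO element names).
@dataclass(frozen=True)
class LoanRecord:
    loan_id: str
    loan_amount: float
    property_value: float
    note_rate_percent: float


# A rule returns an error message, or None when the record passes.
Rule = Callable[[LoanRecord], Optional[str]]


def amount_is_positive(r: LoanRecord) -> Optional[str]:
    return None if r.loan_amount > 0 else "loan_amount must be positive"


def value_is_positive(r: LoanRecord) -> Optional[str]:
    return None if r.property_value > 0 else "property_value must be positive"


def rate_is_plausible(r: LoanRecord) -> Optional[str]:
    # The 25% ceiling is an arbitrary illustrative threshold.
    ok = 0 < r.note_rate_percent <= 25
    return None if ok else "note_rate_percent out of range"


RULES: List[Rule] = [amount_is_positive, value_is_positive, rate_is_plausible]


def partition(
    records: List[LoanRecord], rules: List[Rule] = RULES
) -> Tuple[List[LoanRecord], List[Tuple[LoanRecord, List[str]]]]:
    """Split records into clean ones and rejected ones with their errors."""
    clean: List[LoanRecord] = []
    rejected: List[Tuple[LoanRecord, List[str]]] = []
    for record in records:
        errors = [msg for rule in rules if (msg := rule(record)) is not None]
        if errors:
            rejected.append((record, errors))
        else:
            clean.append(record)
    return clean, rejected


records = [
    LoanRecord("A1", 550000.0, 750000.0, 7.55),
    LoanRecord("B2", -1.0, 750000.0, 7.55),  # deliberately invalid amount
]
clean, rejected = partition(records)
```

Only `clean` would be handed to a model or a downstream system; `rejected` keeps each failing record together with the reasons it failed, so the anomaly is caught once at the source rather than rediscovered in every consumer.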

To reinforce the fundamental transformations taking place with mortgage and cross-industry data, “MISMO is making a fundamental shift away from defined transactional methods, and is instead focusing again on data, while at the same time beginning to roll out tools to the membership to translate data into any format desired – XML, JSON, etc.,” Coleman affirms. “No longer will MISMO try to dictate or otherwise support a specific technology, and again, will focus on data. Now, the challenge of providing quality data will remain, however the need to adopt a particular technology or methodology is being removed.”
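The idea of focusing on data and translating it into whatever format is needed can be sketched in a few lines. This is an illustration under stated assumptions, not MISMO's tooling: the functions `to_json` and `to_xml`, the `Loan` root tag, and the field names are all hypothetical placeholders, and only Python's standard library is used.

```python
import json
import xml.etree.ElementTree as ET


def to_json(record: dict) -> str:
    """Serialize a flat record to JSON with a stable key order."""
    return json.dumps(record, sort_keys=True)


def to_xml(record: dict, root_tag: str = "Loan") -> str:
    """Serialize the same flat record to XML, one child element per field."""
    root = ET.Element(root_tag)
    for key in sorted(record):
        child = ET.SubElement(root, key)
        child.text = str(record[key])
    return ET.tostring(root, encoding="unicode")


# Hypothetical field names; real exchanges would use the standard's own names.
loan = {"LoanId": "A1", "AmountUsd": 550000, "RatePercent": 7.55}

as_json = to_json(loan)
as_xml = to_xml(loan)
```

The record is defined once, independent of any wire format, and each serializer is a thin, replaceable layer on top of it. That separation is the design point: the data definition stays stable while the technology used to exchange it can change.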

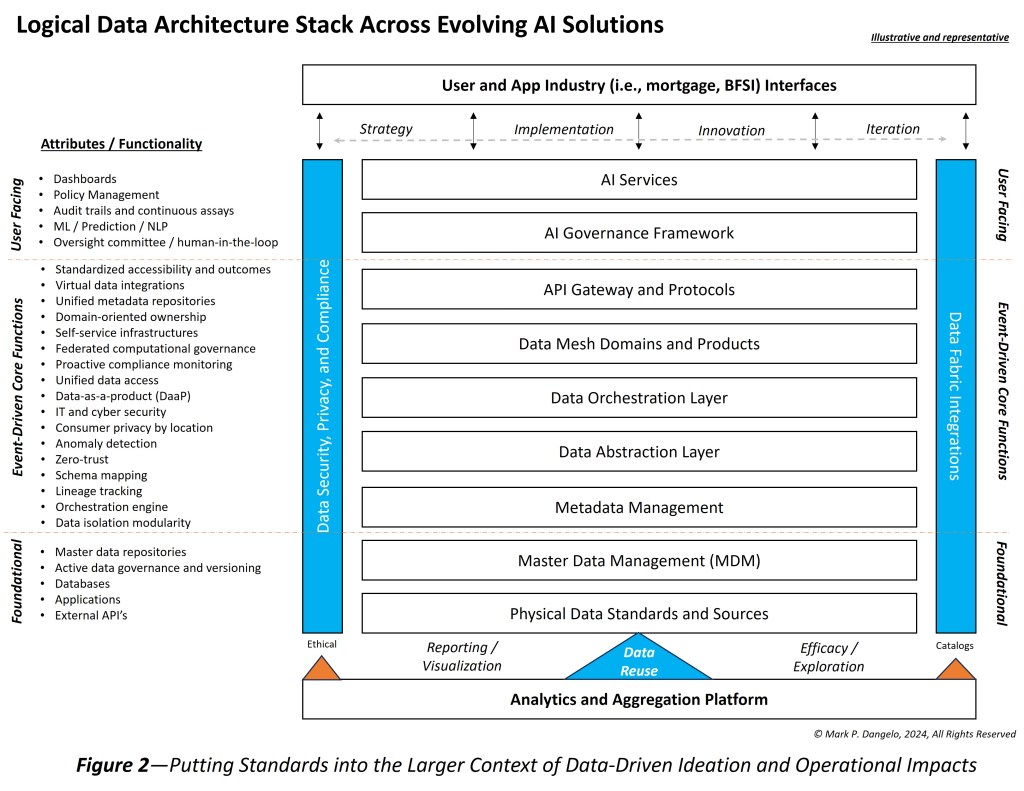

Bringing these concepts together, we arrive at Figure 2, which illustrates the minimal data layers, representative toolsets, and operational principles that must be holistically assembled for future industry relevance. What is immediately recognizable is that many of these technologies are already within the organization and industry solutions either implicitly or explicitly. However, without commonality across the applications and precise context of data usage, these technologies can become expensive, burdensome, and innovation inhibitors beyond the initial implementations (i.e., the maintenance lifecycle costs).

Figure 2 also illustrates the inherent complexity and unfamiliarity addressed at the start of this article. Yet, it turns out that creating data layers, rationalizing application data access, and streamlining inefficient duplication used by a web of industry applications yields both short- and long-term returns. These returns are further amplified when considering the explosive use of data-driven AI to make decisions and create efficiencies.

Actionable Steps

To utilize the above framework, the call-to-action implementation efforts begin with the following:

Embrace domain data management: Decentralized data ownership that links to data meshes, teams, and data products including their SLAs and lifecycles.

Standardize and streamline data integrations: Using data fabrics and common security and privacy workflows and rules, implement data layers from physical to abstraction that create intuitive user access with a unified view of data across organizational elements and domains.

Incorporate automated data quality and compliance: Implement active data management and AI governance solutions that incorporate layered tools, which provide common APIs to applications and users.

Establish and iterate a data-driven culture: For adoption and continuous improvement, utilize advanced, predictive analytics and strategies to improve decision making, adjust data sources, and provide auditable ML / AI sources and uses (e.g., lineage, context, and catalogs).

Design and build resilient data architecture (stacks): Using the above, create orchestration and event-driven abstraction layers that enforce integrity, workflows, contextualization, and auditability.
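To make the last step more tangible, the following is a minimal in-process sketch of an event-driven layer that records lineage for every delivery. It assumes hypothetical names throughout (`DataEvent`, `EventBus`, the `loan.updated` topic, the `los` source, and the `pricing_model` consumer); a production stack would use a real messaging platform and catalog rather than in-memory lists.

```python
import hashlib
import json
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class DataEvent:
    topic: str     # hypothetical topic name, e.g. "loan.updated"
    source: str    # system of record that emitted the change
    payload: dict

    def fingerprint(self) -> str:
        """SHA-256 of the payload, so audits can verify what was delivered."""
        body = json.dumps(self.payload, sort_keys=True).encode("utf-8")
        return hashlib.sha256(body).hexdigest()


Handler = Callable[[DataEvent], None]


class EventBus:
    """Delivers events to subscribers and keeps an append-only lineage log."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Tuple[str, Handler]]] = defaultdict(list)
        self.lineage: List[dict] = []

    def subscribe(self, topic: str, consumer: str, handler: Handler) -> None:
        self._subscribers[topic].append((consumer, handler))

    def publish(self, event: DataEvent) -> None:
        for consumer, handler in self._subscribers.get(event.topic, []):
            handler(event)
            # Record who received which data, from where, after delivery.
            self.lineage.append(
                {
                    "consumer": consumer,
                    "source": event.source,
                    "topic": event.topic,
                    "sha256": event.fingerprint(),
                }
            )


bus = EventBus()
pricing_view: Dict[str, dict] = {}  # a consumer's view, fed only by events

bus.subscribe(
    "loan.updated",
    "pricing_model",
    lambda e: pricing_view.update({e.payload["loan_id"]: e.payload}),
)
bus.publish(DataEvent("loan.updated", "los", {"loan_id": "A1", "rate": 7.55}))
```

Consumers never copy data out of the source system on their own schedule; they react to change events, and each delivery leaves a lineage entry that ties a consumer to a source and a payload hash. That entry is the kind of record an audit of an AI system's inputs would rely on.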

From poets to business leaders to even Biblical scholars, for millennia passages have been written about the “foolish” person building their structures on foundations that cannot withstand the torrents and chaos of the elements that surround them. Today, we find that due to the pace of change, due to the struggles of isolated data silos, and due to the rapid-cycle advancements of AI, it would be foolish to continue to build on the application ideations of the last 40 years.

Data, driven by standards and technologies formed over the last quarter of a century, has become the new foundation. For building intelligent, digital processes on top of these data pillars, data stacks provide the design and adaptability needed to leverage investments and improve margins, along with the lineage necessary for event-driven, linked AI solutions.

As AI grows smaller in its design and focus, the ability to leverage “ring-fenced” industry, customer, and corporate data becomes an imperative with limited time to implement. The use of a data layer architecture delivers unmatched year-over-year value.

In conclusion, can you imagine today trying to communicate across a mortgage or financial industry segment that lacked any standards? Can you imagine data interoperability across the 80+ macro processes comprising origination, servicing, and secondary markets if standards were not present? What would happen if standards did not evolve with the data?

A similar parallel can be drawn with advancing AI solutions. Can you imagine a world of segmented AI all trained on different data? How will results be audited, reconciled, and reported (e.g., compliance)? What version of the “truth” regardless of the speed of delivery is valid, fed into other systems, and will stand up under regulator (or partner) scrutiny?

Moving forward, the industry challenge is to transform itself once again, but this time utilizing design principles that have evolved over 25 years with the movement from digitized paper-based systems to native data-centric designs. Time will tell if the industry can move beyond the traditional, transactional data mindset to one that is built on a data stack foundation regardless of the processes demanded.

Coleman provides an example of how data coupled with emerging and enabling technologies (e.g., AI) could be used by large and small organizations, “What if the homebuyer could just answer just a few questions. 1) What do you want to do? Buy? Refi? 2) What are the terms? Who are you? What is the transaction? In other words: Hi, I am David Coleman. I want to buy the house on 123 Main Street for $750,000. I want to put $200,000 down. What are my options? OK, great. I’ll take the 30-year at 7.55%. Then the AI Engine goes and does it all. It understands the implied processes and where to source the data including all relevant disclosures.”

To make this a reality, these intelligent systems demand data lineage and consistency that transcend traditional application-centric designs. Holistically, the methods, tools, and designs are available to the industry, all built on the demanding work that began 25 years ago. Will data-centric investments become the norm, or will industry leaders adhere to their tradition of investment and improvement?