Mark Dangelo on AI for Mortgage: ‘Do or Do Not. There is No Try’

As AI moves from hype to layered solutions defined by data, the belief that familiar approaches will produce outsized results is a false narrative. AI requires holistic design and implementation that may look familiar until you begin to integrate and scale. Apple has just given consumer AI “legitimacy.”

Historically, the mortgage industry racks up its largest volumes during the summer months, building margins to offset the slower seasons and a “nest egg” for operational improvements. To state the obvious, using interest rates to tame inflation, combined with continually rising home prices, has frozen many buyers out of the market.

With 2023 lending touching 40-year lows (under $1.5 trillion), revised 2024 estimates only just reaching $1.8 trillion, and innovative technologies and capabilities arriving (e.g., generative AI, AI-as-a-service (AIaaS), and data-as-a-product (DaaP)), the imperative for continuous operational improvement has never been greater. Indeed, the markets are showing signs of a bounce, but is it a false positive? Will the bounce benefit only the larger originators and servicers? Will any recovery be uneven, forcing independent mortgage banks (IMBs) out of the market?

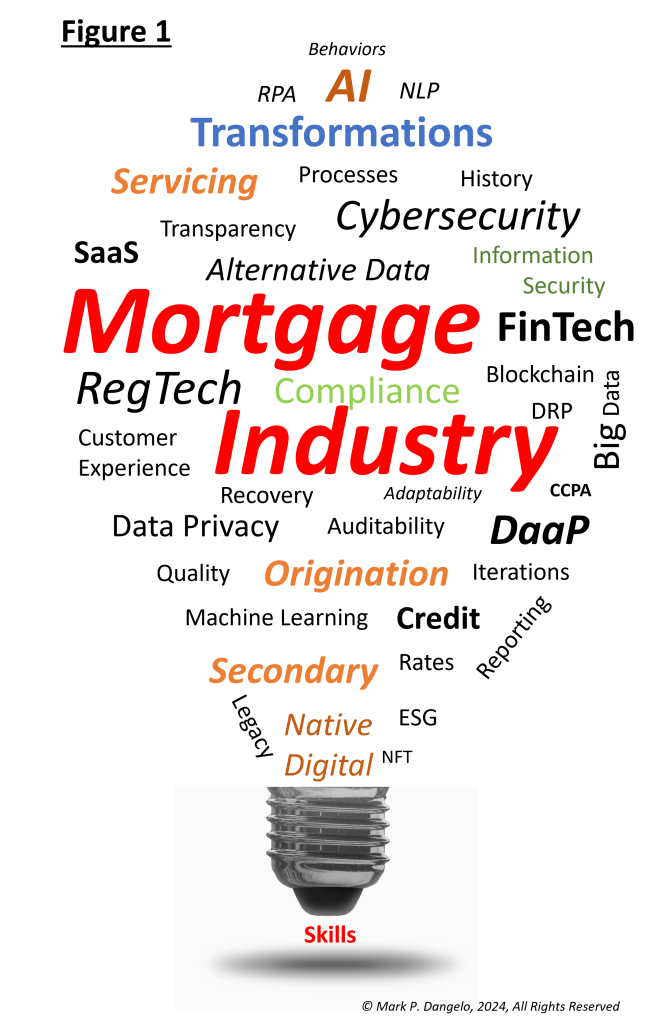

Researchers, vendors, and advisors are running their updated scenarios as we approach mid-2024. Will they, as many have done for the last 18 months, pin their predictions on artificial intelligence (AI), the darling of business leaders and technologists that has been 40 years in development? If they do recommend “intelligent” decisioning based on standardized, qualified, and alternative data, what organizational, process, and personnel challenges must be overcome? Will tackling one area or solution (see Figure 1) provide value by itself?

As we are all acutely aware, the mortgage markets of the future are about far more than technology. The changes fundamentally begin with data: vast volumes of it, including alternative data (from outside the enterprise or even the industry), linked together in conceptual meshes or fabrics. This approach shifts IT and application provisioning toward data as the foundation, rather than fragmented or siloed repositories or marts.

For data-defined AI capabilities, data-first ideation is critical as AI becomes more robust and “cascades” between processes and systems, like rain feeding a waterfall that feeds a river that feeds still other systems. The data is the rain. As AI feeds AI, which in turn feeds other complex “federated” AIs, the industry’s idea of how applications are defined will be permanently and quickly altered.

Referring to Figure 1 again, we can intuitively grasp the rationale, complexity, and requirements that separate each of these identifiers. Typically, the industry and researchers have solved for one element at a time; that is not the future of AI. The watershed moment will likely be Apple’s consumer focus, which will shift the behavior of billions of users toward “accepting” data-driven AI and its linkages with countless vendors. Yet this permanent, fundamental shift carries both payoff and risk for all industry participants, offering a unique opportunity to compete now, at the beginning of a next-generation data and consumer shift.

Yes, AI will be particularly important. Yes, data, including data-as-a-product, is critical, with solutions like master data management (MDM) and AI governance becoming easier and more intuitive. Yes, technology will enable the enterprise to be efficient and adaptable. However, none of these marvelous advancements will have more than a fleeting impact on margins and market share if they are not “plugged” into skills. Do you have them, how will you get them, and how will you retain them?

People, their capabilities, and their skills are what power AI adoption and adaptability within the enterprise beyond the last 18 months of pilot investments. Skills are the electricity of AI.

At this point you may be asking, “This is great, and I understand the many variables involved in future markets, but how can I, my teams, my vendors, and my researchers move forward without fantastical budgets? What should we be asking to zero in on a call to action?”

Let’s review a few of the illustrative questions that need to be asked, answered, and integrated, going beyond the prescriptive one-size-fits-all advice found in the literature. The Tier 1 questions for measuring any future change and investment would be:

What improvements do you need to make based on history and future requirements?

- Customers
- Competitors
- Regulators
- Vendors

Are there priorities and dependencies that must be addressed?

What timeframes will be necessary?

Are there unknowns or risks that come with the identification of future operating models, and can they be divided into roadmaps (like Legos or building blocks)?

How will operational models and success be determined? What are the measures, and will they remain fixed or change as results progress?

What types of architectures or componentization may be required to deliver on the above: cloud, mixed, federated, fabrics, meshes, marts, and so on?

Where do the Tier 1 demands intersect with the rising intelligent decision-making offerings and future ecosystems? What core components, competencies, and compartmentalizations does that rationale imply for adoption?

These initial, representative Tier 1 questions outline the “future state” needed to understand where, in the evolving and rapidly changing mortgage industry model, you must deliver value to be relevant, to be innovative, and, yes, to survive.

Next, it is necessary to objectively assess the designs in place today against the questions above: what are their strengths, weaknesses, opportunities, and threats (SWOT)? This type of assessment differs from the traditional idea of current-state to future-state mapping.

Introducing a different ideation process (data-centric rather than application-centric) shifts value and assessment criteria toward unfamiliar methods and techniques, especially as the mortgage industry moves from a transactional mindset to layers of touchpoints. Throughout the history of mortgage processing (loosely excluding warehouse and mart constructions), application-centric thinking, often encased within departments, has dominated software and hardware provisioning, even for SaaS and cloud over the last decade.

Therefore, Tier 2 focuses less on process and more on a deep dive into data, data reuse, and data productization. A few starter questions would be:

What cultural characteristics do we have, and how rapidly can we transform? Start with a basic “t-column” to identify categories, and then move into a SWOT analysis by grouping. Data is valuable only if it is reused and shared at a granular level, complete with operational rules.

Where do our current applications align with the Tier 1 needs and where do they diverge? (More on this when we get into Tier 3 / transformational planning.)

What data (usually defined within an internal or FinTech application) is available? What types of data are available (metadata, structured, unstructured, alternative/third-party), and have they been ethically sourced?

What level of support, understanding, automation, and third-party capability is available to assist with granular representation of applications, technologies, and the data within them? Is this a “black box,” or do the skills and MDM designs exist within our employee roster?

What integration challenges consistently impact customers, efficiencies, margins, and upstream and downstream collaborations?

Which aspects of our infrastructure are positives, and which are negatives?

These Tier 2 questions begin to establish an operational baseline across all the common taxonomies: people, process, technology, applications, data, and even outsourcing. The point of objectively addressing the Tier 1 and Tier 2 questions is that leadership and transition teams can clearly understand where the organization needs to go, as well as critique where it is today.

With these questions and their answers representing both ends of a continuum, the arduous work begins: how to move the organization, its customers, and its partners from today firmly into tomorrow. How can this be done while also operating as a “going concern”? In this era of “everything AI,” this gap analysis, linked to agile transformation plans of attack, is the engine for achieving results.

However, what derails most of these efforts, whether AI or traditional, is that both ends identified by the questions move (i.e., they are not steady state). No organization stays static while change is being made. No industry fails to adapt. So, with AI in its embryonic stages, mortgage leaders who march to a plan derived once from the Tier 1 and Tier 2 outcomes will get results that made sense only for the timeframe in which the plan was created.

Historically, this has always been a challenge for initiatives defined and driven in-house, and it could be fatal if these methods are rigidly applied to AI and its underlying data hyper-advancements (e.g., LLMs, SLMs, ALMs, virtualization). Indeed, the explosion of AI capabilities is very promising. With the hype stage now yielding to production-capable, fully scalable, data-driven solutions, AI may be the means (using the illustrative approach above) for IMBs and other small and medium industry participants to change the market impact and valuation they have had over the last three decades.

The approach to adopting AI outlined above may look similar to existing models and architectures, but in practice it is quite different. Far from AI replacing humans, never has an individual’s experience mattered so much, yet been discounted so greatly. With AI, it is “do or do not” (thank you, Yoda): the opportunity for a step-function change in industry innovation has never been so great. It remains to be seen who will pivot and who will assume AI is just tradition in a new wrapper.

(Views expressed in this article do not necessarily reflect policies of the Mortgage Bankers Association, nor do they connote an MBA endorsement of a specific company, product or service. MBA NewsLink welcomes your submissions. Inquiries can be sent to Editor Michael Tucker or Editorial Manager Anneliese Mahoney.)